Description

Save EDIFACT records + create Invoices

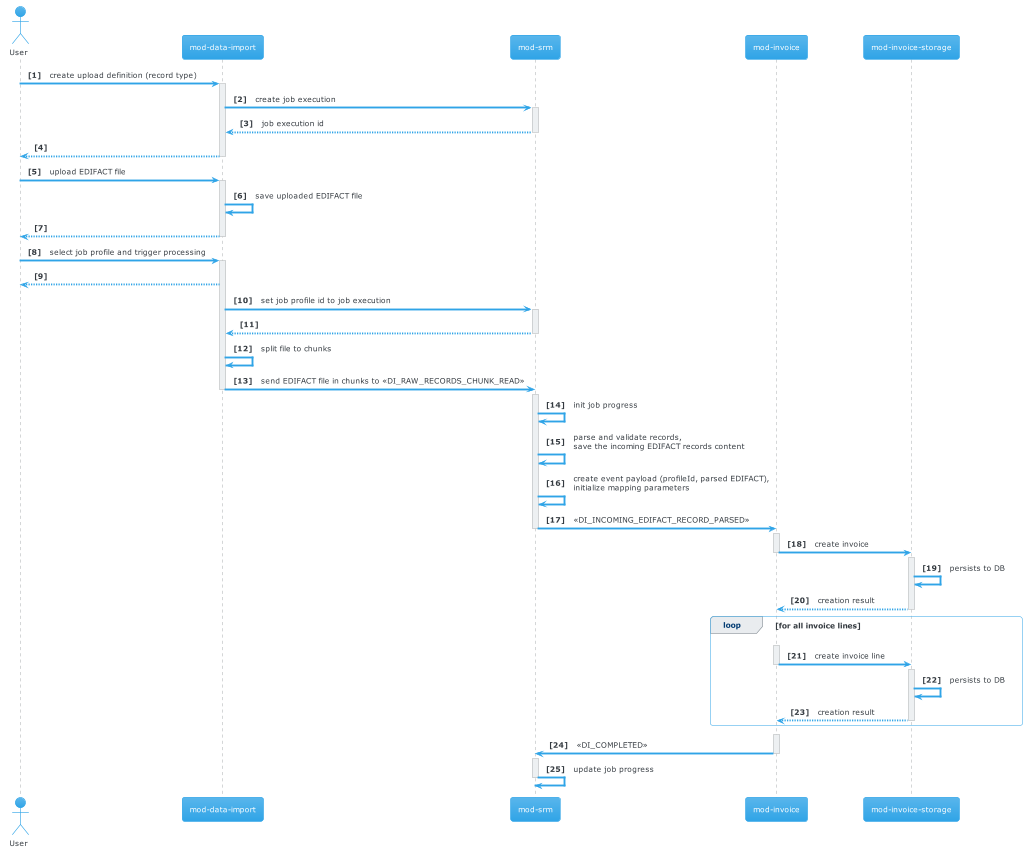

Flow (updated with changes made in Quesnelia R1 2024)

- [1-4] UploadDefinition is created for importing files in mod-data-import

- [2-3] JobExecution is created in mod-srm

- [5] EDIFACT files are uploaded from WEB client to mod-data-import

- [6] Uploaded file is saved chunk by chunk in mod-data-import

- [8-9] User selects the Job Profile and initiates the processing of the uploaded file

- [10-11] The job profile ID is set for JobExecution

- [12-13] The EDIFACT file is split into batches of EDIFACT records, which are put on the Kafka queue DI_RAW_RECORDS_CHUNK_READ

- [14-15] mod-srm reads batches from the DI_RAW_RECORDS_CHUNK_READ queue, parses the EDIFACT records, and saves the incoming records to the DB so that they are available for data import logs. JobExecution is set to IN_PROGRESS status when the first chunk is received.

- [16-17] mod-srm reads the profile, creates a JSON payload (containing the parsed EDIFACT) for processing, and sends it to the appropriate Kafka queue, DI_INCOMING_EDIFACT_RECORD_PARSED (one message per EDIFACT record)

- [18-20] mod-invoice reads the message, maps it to an invoice, and creates the invoice via HTTP in mod-invoice-storage

- [21-23] mod-invoice saves each invoice line via HTTP in mod-invoice-storage

- [24] If the invoice is created successfully, mod-invoice issues a DI_COMPLETED event

- [25] mod-srm receives the DI_COMPLETED event and sets the JobExecution status to COMMITTED
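
Steps [12-13] can be illustrated with a minimal sketch of splitting an EDIFACT interchange into records and grouping those records into batches. This is not the mod-data-import implementation. It assumes that one EDIFACT record corresponds to one message running from a UNH segment to its UNT segment, that the default delimiters apply (`'` as segment terminator, `?` as release character, no UNA header overriding them), and that the batch size is a free parameter. The function names and the sample data are hypothetical.

```python
from typing import Iterator, List

SEGMENT_TERMINATOR = "'"  # EDIFACT default; assumed not overridden by a UNA header
RELEASE_CHAR = "?"        # escapes the character that follows it


def split_segments(edifact: str) -> List[str]:
    """Split raw EDIFACT text into segments on unescaped terminators."""
    segments: List[str] = []
    current: List[str] = []
    i = 0
    while i < len(edifact):
        ch = edifact[i]
        if ch == RELEASE_CHAR and i + 1 < len(edifact):
            # Keep the release character and the escaped character together.
            current.append(edifact[i:i + 2])
            i += 2
            continue
        if ch == SEGMENT_TERMINATOR:
            segment = "".join(current).strip()
            if segment:
                segments.append(segment)
            current = []
        else:
            current.append(ch)
        i += 1
    tail = "".join(current).strip()
    if tail:
        segments.append(tail)
    return segments


def split_records(segments: List[str]) -> List[str]:
    """Group segments into records, one per UNH-to-UNT message (assumption)."""
    records: List[str] = []
    current = None
    for segment in segments:
        tag = segment[:3]
        if tag == "UNH":
            current = [segment]
        elif current is not None:
            current.append(segment)
            if tag == "UNT":
                records.append(
                    SEGMENT_TERMINATOR.join(current) + SEGMENT_TERMINATOR
                )
                current = None
    return records


def batches(records: List[str], size: int) -> Iterator[List[str]]:
    """Yield consecutive batches of at most `size` records."""
    for start in range(0, len(records), size):
        yield records[start:start + size]


# Hypothetical sample interchange with three invoice messages.
SAMPLE = (
    "UNB+UNOC:3+SENDER+RECEIVER+240101:1200+1'\n"
    "UNH+1+INVOIC:D:96A:UN'BGM+380+INV-1'FTX+AAI+++It?'s escaped'UNT+4+1'\n"
    "UNH+2+INVOIC:D:96A:UN'BGM+380+INV-2'UNT+3+2'\n"
    "UNH+3+INVOIC:D:96A:UN'BGM+380+INV-3'UNT+3+3'\n"
    "UNZ+3+1'\n"
)

records = split_records(split_segments(SAMPLE))
chunks = list(batches(records, size=2))
print([len(chunk) for chunk in chunks])  # prints [2, 1]
```

In this sketch each batch stands for one message on DI_RAW_RECORDS_CHUNK_READ. The envelope segments (UNB, UNZ) are dropped here for brevity; whether the real splitter carries them along with each record is not stated in this page.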

Diagram (updated with changes made in Quesnelia R1 2024)

Flow

- JobDefinition (uuid, profile; the profile defines the job type: insert/update) for the import is created in mod-srm

- The EDIFACT file and the JobDefinition ID are uploaded from the WEB client to mod-data-import (the file is stored in memory and can be persisted; out-of-memory errors are possible)

- EDIFACT records are packed into batches and put on the Kafka queue DI_RAW_RECORDS_CHUNK_READ

- mod-srm reads batches from the queue, validates them, and passes them to mod-srs via the Kafka queue DI_RAW_RECORDS_CHUNK_PARSED. The job starts when the first chunk is received.

- mod-srs stores the records in the PostgreSQL database (broken records are also stored, as 'error record') and returns the result via the Kafka queue DI_PARSED_RECORDS_CHUNK_SAVED

- mod-srm reads the profile, creates a JSON payload (containing the parsed EDIFACT, profile, and mapping parameters) for processing, and exports it to the appropriate Kafka queue, DI_EDIFACT_RECORD_CREATED

- mod-invoice reads the message from DI_EDIFACT_RECORD_CREATED
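
The hand-offs in this flow can be summarised as a small table of hops, sketched below. The queue names come from the list above. The producer and consumer of each hop are inferred from the wording of the steps: in particular, mod-data-import as the producer of DI_RAW_RECORDS_CHUNK_READ and mod-invoice as the consumer of DI_EDIFACT_RECORD_CREATED are assumptions. The `Hop` type and the helper functions are hypothetical names used only for illustration.

```python
from typing import List, NamedTuple


class Hop(NamedTuple):
    topic: str
    producer: str
    consumer: str


# Producers and consumers are inferred from the flow description (assumption).
FLOW: List[Hop] = [
    Hop("DI_RAW_RECORDS_CHUNK_READ", "mod-data-import", "mod-srm"),
    Hop("DI_RAW_RECORDS_CHUNK_PARSED", "mod-srm", "mod-srs"),
    Hop("DI_PARSED_RECORDS_CHUNK_SAVED", "mod-srs", "mod-srm"),
    Hop("DI_EDIFACT_RECORD_CREATED", "mod-srm", "mod-invoice"),
]


def validate_chain(flow: List[Hop]) -> None:
    """Check that each hop is produced by the module that consumed the previous one."""
    for previous, following in zip(flow, flow[1:]):
        if previous.consumer != following.producer:
            raise ValueError(
                f"{following.topic} is produced by {following.producer}, "
                f"but {previous.topic} was consumed by {previous.consumer}"
            )


def topics_consumed_by(module: str, flow: List[Hop]) -> List[str]:
    """List the queues a module reads from, in flow order."""
    return [hop.topic for hop in flow if hop.consumer == module]


validate_chain(FLOW)
print(topics_consumed_by("mod-srm", FLOW))
# prints ['DI_RAW_RECORDS_CHUNK_READ', 'DI_PARSED_RECORDS_CHUNK_SAVED']
```

Laying the flow out this way makes it easy to see that mod-srm appears twice as a consumer: once for raw chunks and once for the save result returned by mod-srs.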