...

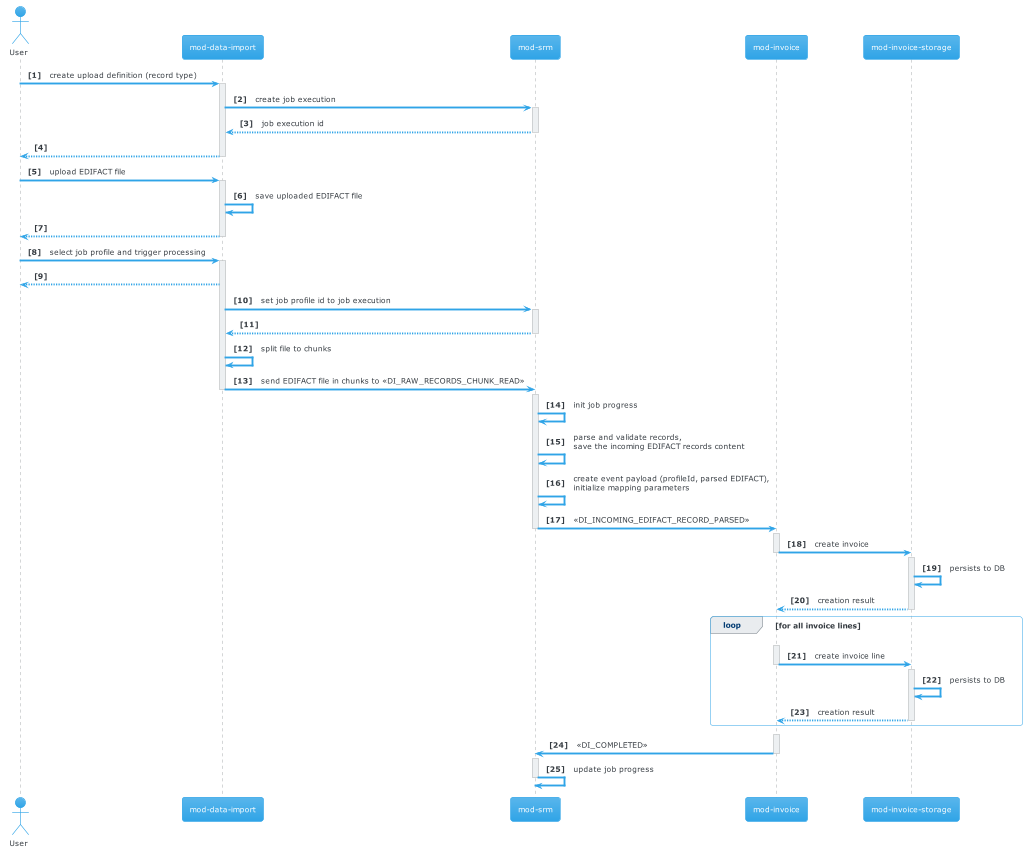

Diagram (updated with changes made in Quesnelia R1 2024)

Flow

- A JobDefinition (uuid, profile; the profile defines the job type: insert/update) for the import is created in mod-srm

- The EDIFACT file and the JobDefinition ID are uploaded from the web client to mod-data-import (the file is stored in memory and can be persisted; an out-of-memory error is possible)

- EDIFACT records are packed into batches and published to the Kafka queue DI_RAW_RECORDS_CHUNK_READ

- mod-srm reads the batches from the queue, validates them, and passes them to mod-srs via the Kafka queue DI_RAW_RECORDS_CHUNK_PARSED. The job starts when the first chunk is received.

- mod-srs stores the records in the PostgreSQL database and returns the result via the Kafka queue DI_PARSED_RECORDS_CHUNK_SAVED (broken records are also stored, as 'error record')

- mod-srm reads the profile and creates a JSON payload (containing the parsed EDIFACT, the profile, and the mapping parameters) for processing, then exports it to the appropriate Kafka queue, DI_EDIFACT_RECORD_CREATED

- mod-invoice reads the payload from the Kafka queue DI_EDIFACT_RECORD_CREATED
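
The hops listed above can be summarized as a small in-memory sketch. It is illustrative only: the module and queue names come from the flow, while `Hop`, `FLOW`, `chunk_records`, `trace`, and the batch size are hypothetical names and values introduced here. The sketch does not talk to Kafka and does not reflect how the modules actually implement batching.

```python
from typing import Iterator, List, NamedTuple


class Hop(NamedTuple):
    """One step of the flow: who publishes, to which queue, who reads."""
    producer: str
    topic: str
    consumer: str


# Module and queue names are taken from the flow description above.
FLOW: List[Hop] = [
    Hop("mod-data-import", "DI_RAW_RECORDS_CHUNK_READ", "mod-srm"),
    Hop("mod-srm", "DI_RAW_RECORDS_CHUNK_PARSED", "mod-srs"),
    Hop("mod-srs", "DI_PARSED_RECORDS_CHUNK_SAVED", "mod-srm"),
    Hop("mod-srm", "DI_EDIFACT_RECORD_CREATED", "mod-invoice"),
]


def chunk_records(records: List[str], batch_size: int) -> Iterator[List[str]]:
    """Pack records into batches of at most batch_size items.

    batch_size is an assumed parameter; the real chunk size is not
    stated in this document.
    """
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    for start in range(0, len(records), batch_size):
        yield records[start:start + batch_size]


def trace(flow: List[Hop]) -> List[str]:
    """Render each hop as 'producer -> queue -> consumer'."""
    return [f"{hop.producer} -> {hop.topic} -> {hop.consumer}" for hop in flow]


if __name__ == "__main__":
    for line in trace(FLOW):
        print(line)
    # Three placeholder records split into batches of two.
    print(list(chunk_records(["rec-1", "rec-2", "rec-3"], batch_size=2)))
```

Keeping the hops in a plain list makes it easy to see that mod-srm appears twice as a consumer: once for the raw chunks and once for the saved result returned by mod-srs.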

...