Folio logging solution

Problem statement

There should be a platform-wide solution for log aggregation so that issues can be identified quickly and their root causes found.

Log entries that belong to the same business transaction but are written by different microservices should carry a common request id, so that all entries for that transaction can be found.

There should be configurable alerting so that information about new issues is received as soon as possible (this is expected to be handled by the hosting provider).

Current state

Okapi aggregates logs from all applications into a single file.

Logging Solution

Java logging library

The Java logging library Log4j2 was initially used to implement the core modules. All other Java modules should also use Log4j2 so that a common logging approach integrates seamlessly across the platform.

Logging facilities provided by RMB

To include request information in log entries, RMB-based modules will be able to use FolioLoggingContext once RMB-709 is implemented (it is blocked by the Vert.x 4 release and migration). For now this is implemented only in Okapi.

Non-RMB Java modules can use the Mapped Diagnostic Context (MDC) where possible. A mechanism should be implemented to supply request headers to the MDC (e.g. MDC.put("requestId", "some-id");).

When a user request is routed through several different microservices and fails somewhere along the way, the request id included in every log message allows the request to be tracked across all of them.
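The mechanism for supplying request headers to the MDC could look roughly like the sketch below. It is a minimal illustration, not the RMB or folio-spring-base implementation: the class RequestLogContext, the method fromHeaders, and the context keys requestId, tenantId, and userId are hypothetical names chosen for this example. The sketch only builds the key/value pairs from the Okapi headers and uses the JDK alone; a real module would pass each pair to the MDC (for example ThreadContext.put(key, value) in Log4j2) at the start of request handling and clear them when the request completes.

```java
import java.util.LinkedHashMap;
import java.util.Locale;
import java.util.Map;

/** Hypothetical helper: maps Okapi request headers to logging-context entries. */
final class RequestLogContext {

    // Lower-cased header name -> context key.
    // The context key names are assumptions made for this example.
    private static final Map<String, String> HEADER_TO_KEY = new LinkedHashMap<>();
    static {
        HEADER_TO_KEY.put("x-okapi-request-id", "requestId");
        HEADER_TO_KEY.put("x-okapi-tenant", "tenantId");
        HEADER_TO_KEY.put("x-okapi-user-id", "userId");
    }

    private RequestLogContext() { }

    /**
     * Returns the context entries for the known headers that are present
     * and non-empty. Header names are matched case-insensitively; all
     * other headers are ignored.
     */
    static Map<String, String> fromHeaders(Map<String, String> headers) {
        Map<String, String> context = new LinkedHashMap<>();
        for (Map.Entry<String, String> header : headers.entrySet()) {
            if (header.getKey() == null) {
                continue;
            }
            String key = HEADER_TO_KEY.get(header.getKey().toLowerCase(Locale.ROOT));
            String value = header.getValue();
            if (key != null && value != null && !value.isEmpty()) {
                context.put(key, value);
            }
        }
        return context;
    }
}
```

Once the entries are in the MDC, a Pattern layout can print them with the %X{requestId} conversion, so every log line written while handling the request carries the same id.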

Usage of Log4j 2 Layouts

An Appender in Log4j 2 uses a Layout to format a LogEvent into a form that meets the needs of whatever will be consuming the log event. The library provides a complete set of Layout implementations. It is recommended to use either the Pattern or the JSON layout in FOLIO backend modules. By default, the Pattern layout should be used for development, testing, and production environments. In environments where log aggregators are used, the JSON layout should be enabled explicitly for all backend modules by the support/deployment teams.

All FOLIO backend modules must provide configuration property files for both layouts (Pattern and JSON). In addition, recommended default logging configurations are provided by the respective libraries (folio-spring-base, RMB).

Default configuration for JSON Layout

For any log aggregation solution, JSON-formatted logs are preferable to plain text in terms of parsing performance and simplicity. All FOLIO modules should use the same JSON format for logs, e.g. the following:

For Spring Way modules https://github.com/folio-org/folio-spring-base/blob/master/src/main/resources/log4j2-json.properties

For RMB modules https://github.com/folio-org/raml-module-builder/blob/master/domain-models-runtime/src/main/resources/log4j2-json.properties

Default configuration for Pattern Layout

For Spring Way modules https://github.com/folio-org/folio-spring-base/blob/master/src/main/resources/log4j2.properties

For RMB modules https://github.com/folio-org/raml-module-builder/blob/master/domain-models-runtime/src/main/resources/log4j2.properties

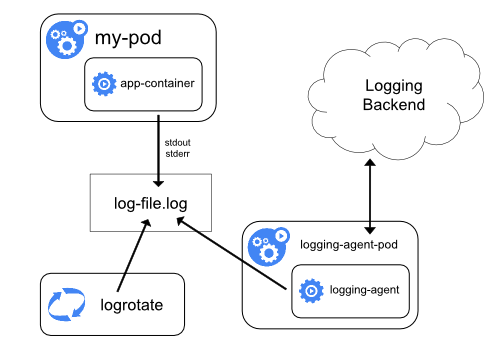

Logging aggregation stack

The deployment and configuration of the logging aggregation stack is the responsibility of the hosting provider. See below for an example configuration of a logging stack using EFK: