Goal of the test scenario: create a use case that is commonly performed in the application and that should complete within a user-friendly time frame (data size and request complexity can affect the time frame).

1. Install the open-source BlazeMeter Chrome plugin

The plugin can be downloaded from https://chrome.google.com/webstore/detail/blazemeter-the-continuous/mbopgmdnpcbohhpnfglgohlbhfongabi/related

You need to sign up before you can use it. Sign-up is free.

There is no need to take the TEST offered for any application; skip it to save time.

2. Use a screen recording tool to record every step of the scenario

Start the screen recording before you start recording with the Chrome plugin. Use any screen recording tool you are comfortable with, or one of the tools suggested below.

You do not need to record the data preparation for the scenario if it takes much longer than the scenario itself.

Windows tool for screen recording: ShareX, https://getsharex.com/

macOS tool: the native QuickTime Player

3. Set up data for the scenario (if the scenario cannot be performed with the regular data set of the snapshot/testing/bugfest ENV)

4. Walk through the scenario on the selected ENV to make sure that no issues related to the data set or anything else will come up during the recording

5. Start recording the screen with the selected tool

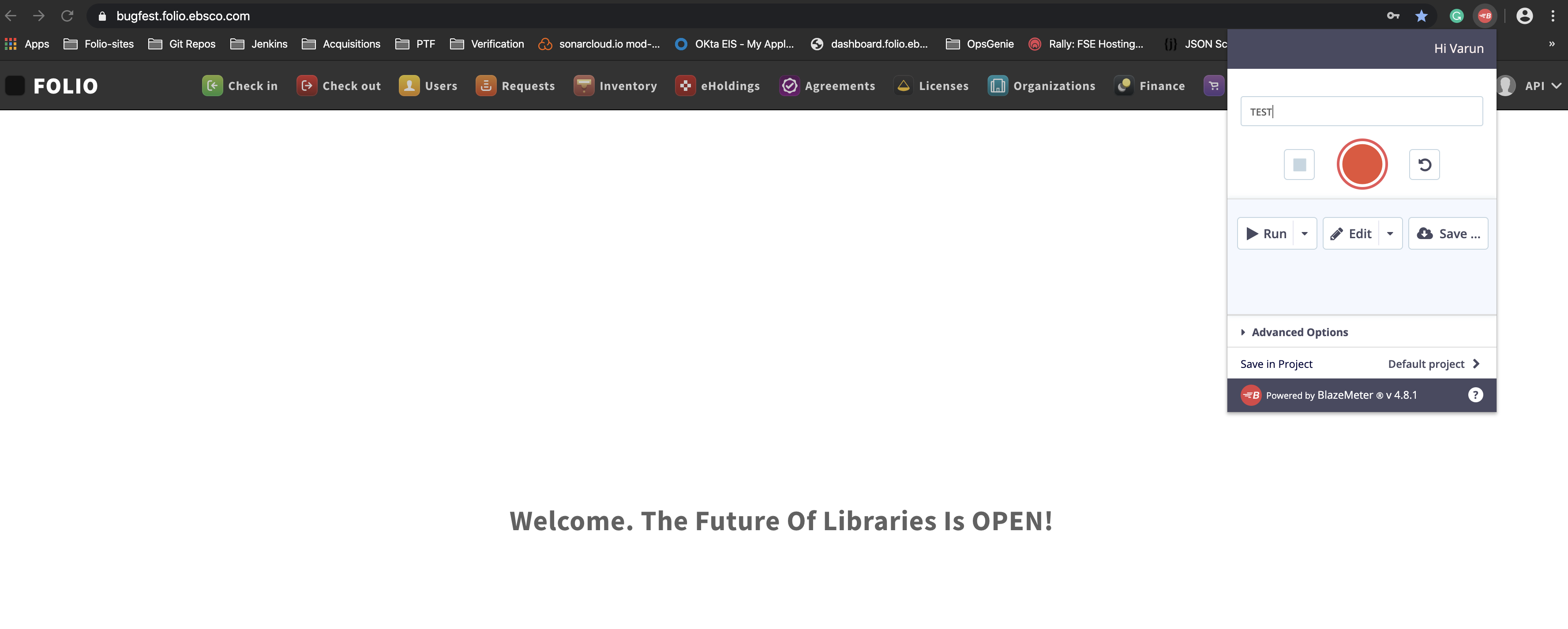

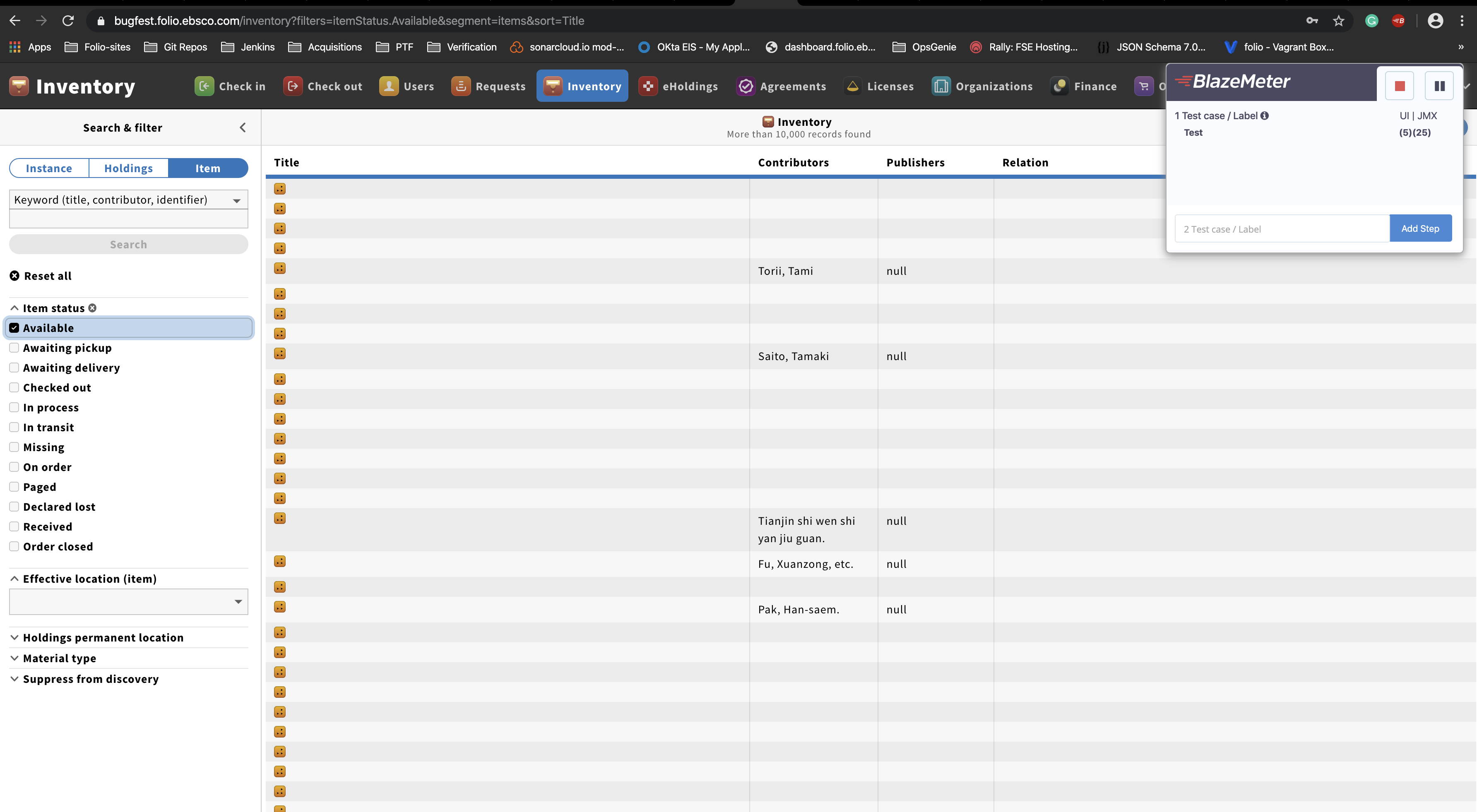

6. Start recording the scenario with the Chrome plugin

Type a short name for your scenario, for example: "Searching for a certain book in Spanish" (the name should give a high-level understanding of the scenario).

Start recording and execute the workflow.

Stop recording the scenario.

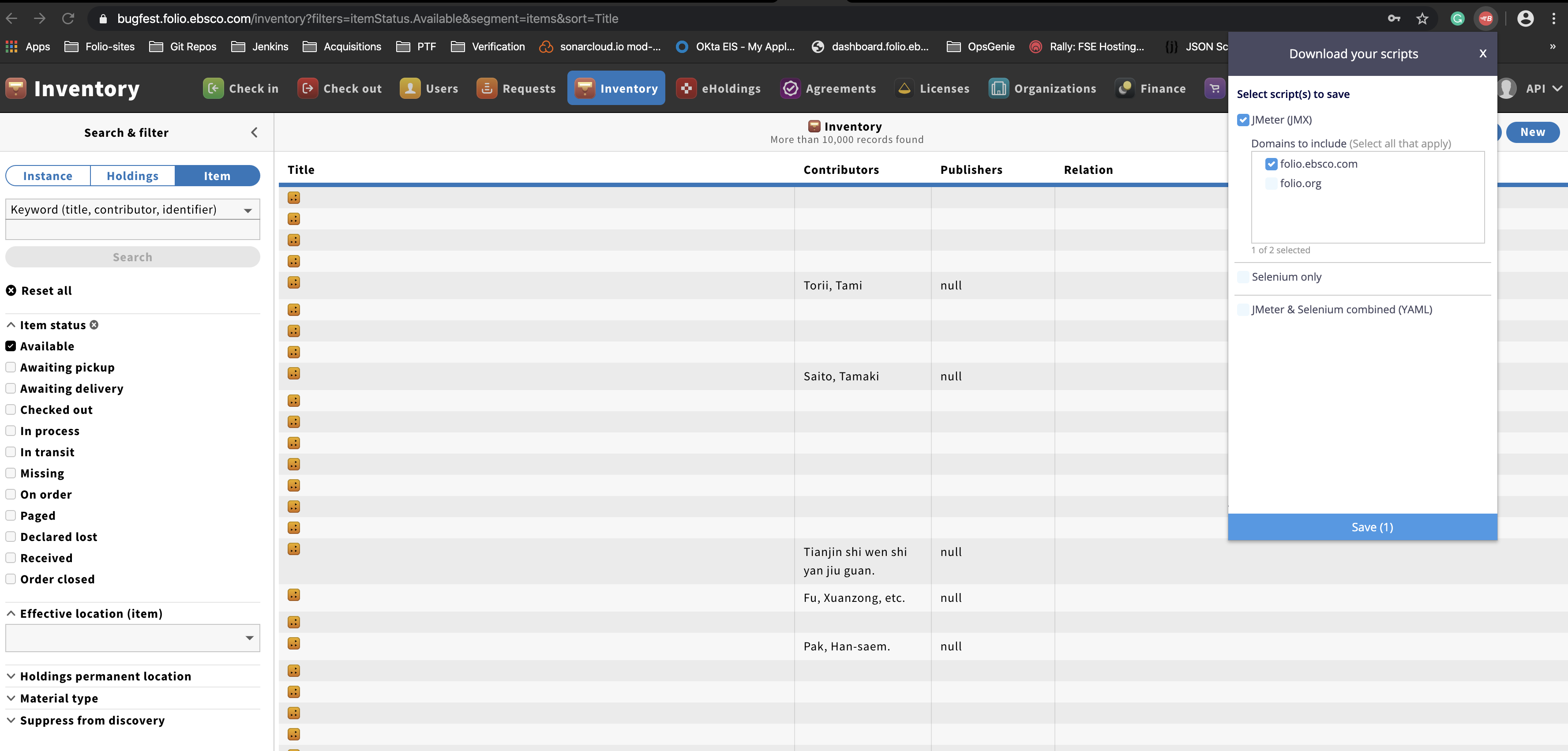

"Save" the recorded scenario as a JMX file.
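Before attaching the JMX file, it can help to confirm that the recording actually captured the requests of the workflow. JMX files are XML, so a short script can list the recorded HTTP requests. This is a minimal sketch, assuming the plugin exports standard JMeter `HTTPSamplerProxy` elements with `HTTPSampler.method` and `HTTPSampler.path` string properties; the helper name `list_http_samplers` and the script name `list_samplers.py` are hypothetical.

```python
"""Hypothetical helper: list the HTTP requests recorded in a JMX file."""
from __future__ import annotations

import sys
import xml.etree.ElementTree as ET


def list_http_samplers(jmx_bytes: bytes) -> list[tuple[str, str, str]]:
    """Return (name, method, path) for each enabled HTTPSamplerProxy element."""
    root = ET.fromstring(jmx_bytes)
    samplers = []
    for sampler in root.iter("HTTPSamplerProxy"):
        if sampler.get("enabled", "true") != "true":
            continue  # skip samplers that were disabled in the recording
        # Method and path are stored as direct <stringProp> children (assumed JMX layout).
        props = {p.get("name"): (p.text or "") for p in sampler.findall("stringProp")}
        samplers.append((
            sampler.get("testname", ""),
            props.get("HTTPSampler.method", ""),
            props.get("HTTPSampler.path", ""),
        ))
    return samplers


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python list_samplers.py recorded-scenario.jmx
    with open(sys.argv[1], "rb") as f:
        for name, method, path in list_http_samplers(f.read()):
            print(f"{method:6} {path}  ({name})")
```

If the list is empty or is missing steps you performed, re-record the scenario before moving on to the JIRA steps.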

7. JIRA Flows

Create a user story on the appropriate JIRA board of the module (e.g., mod-inventory-storage) for a dev team to investigate (see section 8 below for what the ticket should contain).

Assign the user story to a dev team. Tag the story with PTF-Review and add Sergiy as a watcher so that PTF can be brought into the process early if there are technical issues.

When the JMeter script is ready, flag PTF by tagging Sergiy in the comments. PTF then clones the story into its PERF project, links the clone to the original ticket, and investigates and tests.

If there is a solution, PTF creates a task for the original dev team to execute, linking it to the PERF and original tickets.

8. JIRA Tickets

The name of the JIRA issue should be similar to the name of the test scenario (an exact match is not needed) and give a high-level description of the scenario.

The description should contain:

- App name/area of issue

- Brief statement of issue

- How many users are active at any given time

- Volume of data

- Expected response time

- Main modules that are involved in the process (if obvious or if known)

- Any specific settings, items, or scenarios (e.g., MARC bib records were being exported during a check-in, or an item being checked out has 10 requests attached to it)

- A list of the backend software modules. To obtain this list, go to Settings → Software versions, copy the middle column, "Okapi Services", into a file, and attach the file to the JIRA issue. Alternatively, while the screen recording from step 5 is running, open this page and scroll down slowly so that the versions are captured in the video.
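If you have direct access to Okapi on the selected ENV, the same module list can also be pulled from Okapi's tenant module endpoint instead of copying it by hand. This is a minimal sketch, assuming `GET /_/proxy/tenants/{tenant}/modules` is reachable with a valid `X-Okapi-Token`, and that UI modules follow the `folio_*` naming convention so they can be filtered out; the helper names are hypothetical, and the Settings → Software versions page remains the documented way to get this list.

```python
"""Hypothetical helper: build the backend module list for the JIRA attachment."""
from __future__ import annotations

import json
import urllib.request


def fetch_tenant_modules(okapi_url: str, tenant: str, token: str) -> list[dict]:
    """Ask Okapi which modules are enabled for the tenant (assumes direct Okapi access)."""
    req = urllib.request.Request(
        f"{okapi_url}/_/proxy/tenants/{tenant}/modules",
        headers={"X-Okapi-Tenant": tenant, "X-Okapi-Token": token},
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)  # expected: a JSON array of {"id": "..."} objects


def format_module_list(modules: list[dict]) -> str:
    """Drop UI modules (assumed folio_* ids) and return one sorted module id per line."""
    ids = sorted(
        m["id"] for m in modules
        if "id" in m and not m["id"].startswith("folio_")
    )
    return "\n".join(ids) + "\n" if ids else ""
```

For example, write `format_module_list(fetch_tenant_modules(url, tenant, token))` to a text file and attach that file to the JIRA issue.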

Set the priority of the task.

Add a note on when you expect the scenario to be tested (to the month).

Attach the scripted scenario (the JMX file) from the Chrome plugin to the JIRA issue.

Attach the recorded screen video to the JIRA issue.
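The checklist in this section can also be expressed as a small pre-submit check on a ticket draft. This is a minimal sketch under stated assumptions: the draft description uses one `Label:` line per required item, and the field labels and accepted video extensions below are hypothetical choices, not a JIRA or PTF convention. "Main modules involved" is left out because the checklist only asks for it when known.

```python
"""Hypothetical pre-submit check for a performance ticket draft (section 8 checklist)."""
from __future__ import annotations

from pathlib import Path

# Labels mirror the description checklist; the exact wording is an assumption.
REQUIRED_FIELDS = [
    "App name/area",
    "Issue",
    "Concurrent users",
    "Data volume",
    "Expected response time",
    "Specific settings/scenarios",
]
# Assumed file extensions for the screen recording.
VIDEO_SUFFIXES = {".mp4", ".mov", ".webm", ".mkv"}


def missing_items(description: str, attachments: list[str]) -> list[str]:
    """Return the checklist items that the ticket draft does not cover yet."""
    missing = [
        f"description: {field}"
        for field in REQUIRED_FIELDS
        if f"{field}:" not in description
    ]
    suffixes = {Path(name).suffix.lower() for name in attachments}
    if ".jmx" not in suffixes:
        missing.append("attachment: JMX script from the Chrome plugin")
    if not suffixes & VIDEO_SUFFIXES:
        missing.append("attachment: screen recording video")
    return missing
```

An empty result means every required description field and both attachments are present; the priority and the expected test month still have to be set in JIRA itself.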